Cybersecurity projects often meet resistance because businesses rarely recognize the value of their data. For many organizations, data is something that has accumulated over years of operations but is seldom treated as an asset. As a result, decision-makers struggle to understand the actual value of their data assets, and getting approval for a cybersecurity project can be difficult. This article presents a fair-valuation approach that helps contextualize the cost of a cybersecurity project against the value of the data it protects.

By Ray K. Ragan, MPM, PMP, ITIL, AgilePro

Examining the value of data seems like a subjective task full of intangible considerations. That changed in the mid-2000s, when U.S. markets saw several commercial data breaches. History, with its sense of irony, now provides an auditable asset valuation of data from the liabilities sheets of these breached companies. Many commercial data breaches resulted in settlements, which can establish a par value of data.[1]

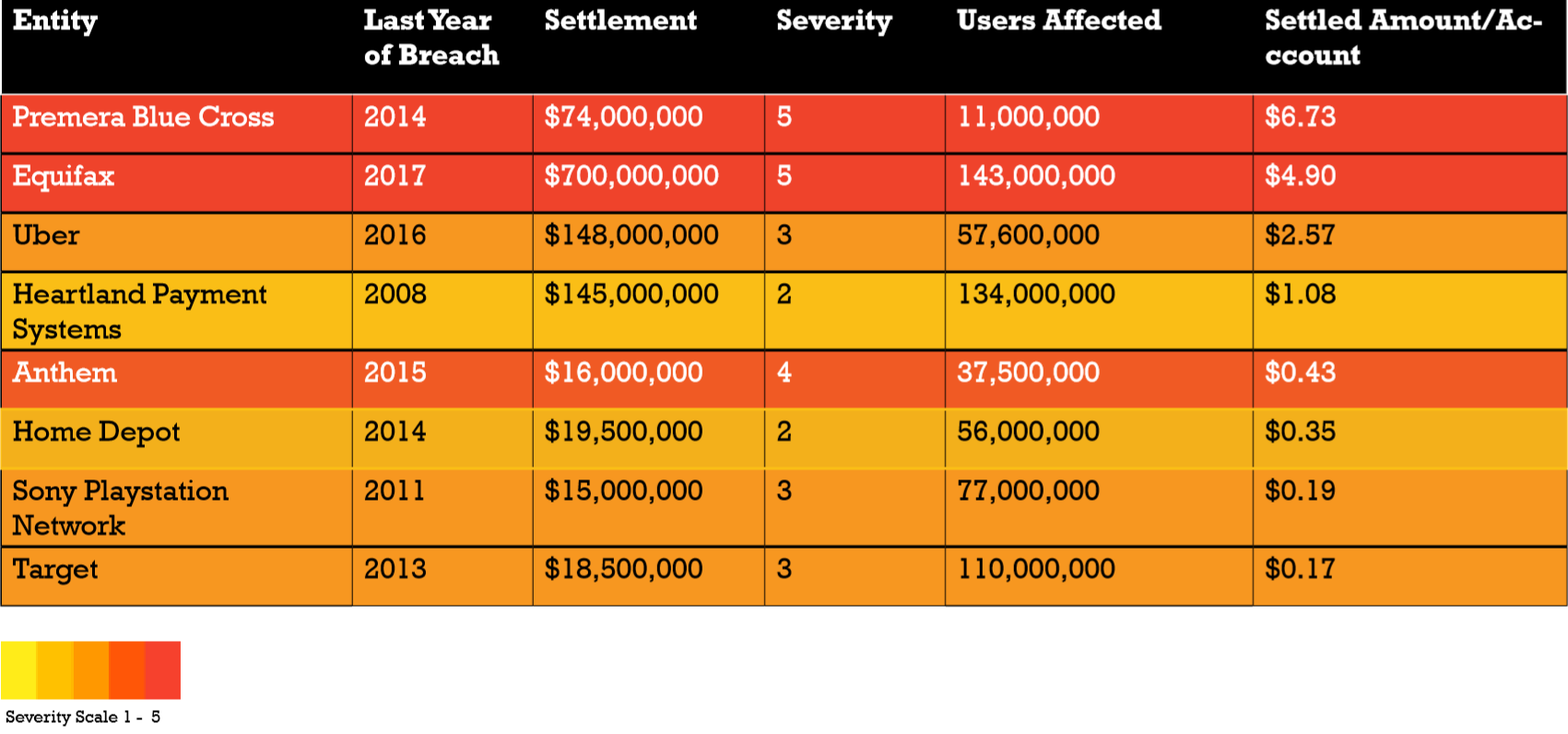

The key to establishing the lower limit of data value is reviewing commercial data breach settlements from 2008 to 2017 for which the settlement amount, along with the number and disposition of compromised accounts, is publicly available.[2] These breaches are then graded by severity based on the nature of the data involved in the compromise. In this nine-year period of consideration, eight commercial data breaches meet these criteria.

Types of data breaches, by severity

For the purposes of this value exercise, the severity is assessed on a numerical scale. A Severity 1 data compromise is data that can be reasonably changed by the victim and includes limited personally identifiable information (PII) with no financial information (e.g. usernames and passwords). A Severity 5 data compromise is data that is immutable and/or contains financial information that could reasonably cause a high degree of harm (e.g. health records and detailed financial records).

These eight commercial data breaches range between $0.17 and $6.73 per compromised account. Target’s Severity 2 breach represents the lower limit and Premera Blue Cross’s Severity 5 breach represents the upper limit. Incidentally, Equifax’s notorious 2017 data breach settled for only $4.90 per account, suggesting settlements place a premium on health record protections over financial records. It is important to note that a settlement may be the sum of damages and punitive considerations. This distorts the valuation, but the figures still serve to bracket the value.[3]

This information allows an organization to establish a minimum value for its data. To do this, the organization must determine the nature of its data and which severity group aligns best. For instance, if the organization is a bank or credit union, using Severity 3 might be sensible, depending on how detailed the customer data is. In this class, the average is $0.97 per customer record, based on the data breach settlements.

This valuation covers only data at rest, that is, data not being actively used in the interest of the organization. The data is static. Now the organization must consider this data in its ideal state of use. If the data were used in its optimal state of utility, what would the fully realized value be? This seems to be an exercise in intangibles, but organizations often know more about the value of their data than it may seem.

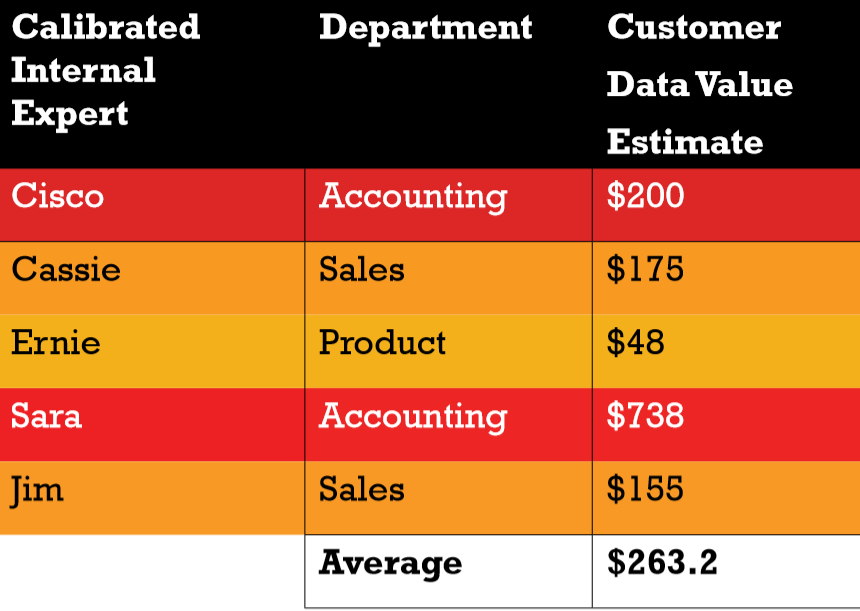

Using the methodology described in the seminal book “How to Measure Anything,” a fair estimate range of data utility value can be determined by applying the Rule of Five. Sampling as few as five calibrated subject experts on the ideal use of the organization’s data yields a usable bracket of data value, with better than 90% confidence that the true median value lies within the range of answers.[4] If a calibrated expert provides an answer far outside the range of the others, it should be treated as an outlier.[5]
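The "above 90%" figure can be checked with simple probability. The sketch below assumes the five answers behave like independent random draws from the population of possible estimates, and it treats the claim as one about the median, which is how the Rule of Five is usually stated. The function names are illustrative and do not come from the book.

```python
import random


def median_coverage(sample_size: int) -> float:
    """Chance that the population median lies between the sample min and max.

    Each independent draw lands above the median with probability 1/2, so the
    range misses the median only when every draw falls on the same side.
    """
    return 1 - 2 * 0.5 ** sample_size


def simulate_coverage(sample_size: int = 5, trials: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo check using uniform draws on [0, 1), whose median is 0.5."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.random() for _ in range(sample_size)]
        if min(sample) < 0.5 < max(sample):
            hits += 1
    return hits / trials


print(f"Analytic coverage for five samples: {median_coverage(5):.2%}")  # 93.75%
print(f"Simulated coverage: {simulate_coverage():.2%}")
```

The analytic result, 1 - 2 × (1/2)^5 = 93.75%, depends only on the sample size, which is why so few experts can still bracket the value.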

In the example presented in the table below, answers from our fictional experts range from $48 to $738 per year, with Sara from Accounting providing $738 based on the current most profitable customers. We like Sara; she uses data. These estimates are based on the organizational assumption that the data accounts for 50% of the revenue and the organization’s operations account for the other 50% of the value. Some organizations may put a higher premium on the data, but this approach provides a starting point for estimating.

Going back to the bank example, this is now iteration two in establishing value. The settlement data provides a lower bound: the value of static data. The input of the internal experts provides what will likely be an upper bound on the value of the organization’s data. Now, add a third input to the valuation, one that is auditable and can be repeatedly verified by others. This is done by reviewing how the organization currently obtains value from its data, usually in terms of customer revenue.

Ask most people in an organization what the average customer revenue is, and the answers will likely range from blank stares to philosophical debates. Even so, this figure is easier to work with when estimating value, because it is derived directly from the data on hand. Remember Sara from Accounting, hero of the expert estimation? Ask her what the average annual revenue of a customer is. This establishes an auditable estimate of data utility value. In this notional example, it is $52.01.

Together, these data sources provide a composite picture of the organization’s data value. The data breach settlements provide a static value for similar data, while the expert estimation and the figures from Accounting provide the real utility value of the organization’s data. Staying with the banking example and using Severity 3 data, the data’s value ranges from $0.97 to $738 per customer record. If this bank has 500,000 customers, its static data is worth about $485,000, while the utility value is likely between $26 million and $369 million. It is unsurprising that the utility value of data is worth much more to an organization than the static value. After all, data’s value is derived from its utility.
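The arithmetic behind these totals is a per-record value multiplied by the number of customer records. A minimal sketch using the figures from the banking example follows; the constant and function names are illustrative.

```python
# Figures from the notional banking example in the article.
CUSTOMERS = 500_000
STATIC_PER_RECORD = 0.97    # Severity 3 settlement average, per record
AUDITED_REVENUE = 52.01     # average annual revenue per customer (Accounting)
EXPERT_HIGH = 738.00        # highest calibrated expert estimate, per year


def total_value(per_record: float, records: int = CUSTOMERS) -> float:
    """Scale a per-record value to the whole customer base."""
    return per_record * records


static_value = total_value(STATIC_PER_RECORD)
utility_low = total_value(AUDITED_REVENUE)
utility_high = total_value(EXPERT_HIGH)

print(f"Static value:       ${static_value:,.0f}")   # $485,000
print(f"Utility value low:  ${utility_low:,.0f}")    # $26,005,000
print(f"Utility value high: ${utility_high:,.0f}")   # $369,000,000
```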

There are more sophisticated approaches to estimating the value of data, such as parametric estimating, which allows more control over the estimate. For example, Sara’s estimate of $738 will only represent a small portion of customers, say 15%, even under the best conditions. In parametric estimating, the calibrated internal subject experts break down the spread of customer revenue into groups and establish a range for each, and these ranges are then combined into a whole. This creates a more accurate picture of the value.
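One way to picture the parametric approach is as a share-weighted sum over customer segments. In the sketch below, only the 15% share and the $738 ceiling for the top segment come from the example above. Every other segment name, share, and revenue range is a hypothetical placeholder that a real exercise would replace with the experts' calibrated answers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    name: str
    share: float  # fraction of the customer base in this segment
    low: float    # low estimate of annual value per customer, in dollars
    high: float   # high estimate of annual value per customer, in dollars


# Hypothetical segments for illustration only, apart from the top
# segment's 15% share and $738 ceiling.
SEGMENTS = [
    Segment("most profitable", share=0.15, low=400.0, high=738.0),
    Segment("typical", share=0.35, low=40.0, high=120.0),
    Segment("low activity", share=0.50, low=5.0, high=30.0),
]


def parametric_range(segments: list[Segment], customers: int) -> tuple[float, float]:
    """Combine per-segment ranges into a total (low, high) value range."""
    total_share = sum(s.share for s in segments)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError(f"segment shares must sum to 1, got {total_share}")
    low = sum(s.share * s.low for s in segments) * customers
    high = sum(s.share * s.high for s in segments) * customers
    return low, high


low, high = parametric_range(SEGMENTS, customers=500_000)
print(f"Parametric utility value: ${low:,.0f} to ${high:,.0f}")
```

Because each segment carries its own range, the combined bracket is typically narrower than one that applies a single high estimate to every customer.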

Regardless of approach, the organization has taken an important step in understanding that data has value. Presenting this information in a cybersecurity project business case makes the conversation much easier. Now decision-makers can see the value of the data asset and how the project costs relate to protecting that asset. This moves the conversation to a simple ROI discussion: a $400,000 project to secure $26 million in data assets makes the cybersecurity project seem like a bargain. It can also help an organization avoid the painful cost of data breach remediation, but that is a different valuation.
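The comparison in the business case reduces to a ratio of project cost to asset value. The sketch below uses the two figures quoted above; the function name is illustrative. It deliberately stops at a cost-to-asset ratio, since a fuller ROI calculation would also need a loss likelihood, which this example does not supply.

```python
def cost_to_asset_ratio(project_cost: float, asset_value: float) -> float:
    """Project cost expressed as a fraction of the asset value it protects."""
    if asset_value <= 0:
        raise ValueError("asset_value must be positive")
    return project_cost / asset_value


PROJECT_COST = 400_000     # cybersecurity project cost from the example
ASSET_VALUE = 26_000_000   # lower utility value of the data from the example

ratio = cost_to_asset_ratio(PROJECT_COST, ASSET_VALUE)
print(f"Project cost is {ratio:.1%} of the data asset value")  # 1.5%
print(f"Each project dollar covers ${ASSET_VALUE / PROJECT_COST:,.0f} of data value")  # $65
```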

References:

1. Pietsch, Bryan, “Largest U.S. Data Breach Settlements with Government Include 3 Insurers,” Insurance Journal, July 23, 2019. Retrieved January 13, 2020, web address: https://www.insurancejournal.com/news/national/2019/07/23/533657.htm.

2. Armerding, Taylor, “The 18 Biggest Data Breaches of the 21st Century,” CSO Online, December 20, 2018. Retrieved January 13, 2020, web address: https://www.csoonline.com/article/2130877/the-biggest-data-breaches-of-the-21st-century.html.

3. Ibid.

4. Hubbard, Douglas W., How to Measure Anything, May 2007, pp. 210-214. More broadly, Part III, Measurement Methods.

5. Ibid., pp. 204-210.

This story first appeared in the June 2020 issue of CISO MAG.

About the Author

Ray K. Ragan is an expert in leveraging technology for mass communication and collaboration in both military and civilian environments. He successfully changed state law to give Veterans parity in small business, mobilized voters to unseat an incumbent, and as a Service member, engaged the enemy in the public information space. Ray holds a Master’s degree in Administration (M.Ad) from Northern Arizona University with an emphasis in Project Management and a Certificate in Strategic Decision and Risk from Stanford University.


Disclaimer

Views expressed in this article are personal. The facts, opinions, and language in the article do not reflect the views of CISO MAG and CISO MAG does not assume any responsibility or liability for the same.