AI and ML present a new dawn in the cybersecurity industry. AI is not a new concept to computing. It was defined in 1956 as the ability of computers to perform tasks that are characteristic of human intelligence. Such tasks include learning, making decisions, solving problems, and understanding and recognizing speech. ML is a broad term referring to the ability of computers to acquire new knowledge without human intervention. ML is a subset of AI and can take many forms, such as deep learning, reinforcement learning, and Bayesian networks. AI is poised to disrupt the cybersecurity space in many ways, possibly delivering the ultimate win for the cybersecurity industry against cybercriminals.

By Dr. Erdal Ozkaya, MD & Regional CISO of a Global Bank

AI/ML in cybersecurity involves deploying self-sufficient tools that can detect, stop, or prevent threats without any human intervention. Threat detection is based on two things: the data supplied by the developers and the training that the security tool's algorithm undertakes on its own. As a result, an AI-powered security tool will become better at detecting threats throughout its life cycle. The original dataset of threats provided by the developers will serve as a reference base that tells the tool what is normal and what is malicious. Before final deployment, the tool will be exposed to insecure environments. In these threat-filled environments, the system will continually learn from the threats it detects or stops. Hacking attempts will also be directed at it. These will include attempts to breach it and attempts to overwhelm its processing capacity with large volumes of malicious traffic. The tool will learn the hacking techniques most commonly used to breach systems or networks. For instance, it will detect the use of password-cracking tools such as Aircrack-ng on wireless networks. Similarly, it will detect brute-force attacks on login interfaces. The main role left to humans in cybersecurity will be to update the algorithms of the AI tools with more capabilities.
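The paragraph above says the tool will learn to recognize brute-force attacks on login interfaces. The underlying pattern can be sketched in Python as a sliding-window count of failed logins per source address. This is a hand-written rule, not a learned model. The event format, the function name `detect_brute_force`, and the thresholds (more than five failures within 60 seconds) are illustrative assumptions.

```python
from collections import defaultdict, deque


def detect_brute_force(events, max_failures=5, window_seconds=60):
    """Return the source IPs with more than `max_failures` failed logins
    within any `window_seconds`-long sliding window.

    `events` is an iterable of (timestamp_seconds, source_ip, success)
    tuples in chronological order. The format and thresholds are
    assumptions for this sketch, not values learned from data.
    """
    recent_failures = defaultdict(deque)  # source_ip -> timestamps of recent failures
    flagged = set()
    for timestamp, source_ip, success in events:
        if success:
            continue
        window = recent_failures[source_ip]
        window.append(timestamp)
        # Discard failures that are older than the sliding window.
        while timestamp - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_failures:
            flagged.add(source_ip)
    return flagged


# Hypothetical login log: one address fails ten times in ten seconds,
# while another user mistypes a password once and then logs in successfully.
events = [(t, "203.0.113.7", False) for t in range(10)]
events += [(3, "198.51.100.2", False), (4, "198.51.100.2", True)]
events.sort(key=lambda e: e[0])

print(detect_brute_force(events))  # {'203.0.113.7'}
```

If the same ten failures are spaced 100 seconds apart, every window holds a single failure and nothing is flagged. This shows how easily an attacker can tune around a fixed threshold.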

AI security systems may be able to contain all threats. Conventional security systems are usually unable to detect threats that exploit zero-day vulnerabilities. With AI, malware will not be able to penetrate the system, even after evolving and adopting new attack patterns. The system will check the code being run by the malware and predict its outcome. If an outcome is deemed harmful, the AI system will prevent the program from executing. Even if the malware obfuscates its code, the AI system will keep tabs on its execution pattern. It will stop the program as soon as it attempts malicious actions, such as modifying sensitive data or the operating system.
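Watching what a program does, rather than what its code looks like, can be sketched as a simple behavioral rule. However the code is obfuscated, a program that wants to tamper with the operating system must still issue write or delete operations against protected locations. In the Python sketch below, the `Event` record, the `PROTECTED_PREFIXES` list, and the `processes_to_stop` function are hypothetical. A real product would receive such events from an OS-level sensor and would likely score behavior with a model rather than a fixed rule.

```python
from dataclasses import dataclass

# Hypothetical locations treated as sensitive in this sketch.
PROTECTED_PREFIXES = ("/etc/", "/boot/", "C:\\Windows\\System32\\")


@dataclass
class Event:
    pid: int     # process that performed the operation
    action: str  # "read", "write", or "delete"
    path: str    # file the operation targeted


def processes_to_stop(events):
    """Return the PIDs, in order of detection, of processes that tried to
    modify a protected location. Reads are allowed, and the first
    offending operation of each process is enough to flag it."""
    stopped = []
    for event in events:
        if event.pid in stopped:
            continue
        modifies = event.action in ("write", "delete")
        if modifies and event.path.startswith(PROTECTED_PREFIXES):
            stopped.append(event.pid)
    return stopped


events = [
    Event(101, "read", "/etc/passwd"),       # reading is allowed
    Event(101, "write", "/tmp/report.txt"),  # writing outside protected paths is allowed
    Event(202, "write", "/etc/passwd"),      # tampering with a system file
    Event(303, "delete", "/boot/vmlinuz"),   # deleting the kernel image
]
print(processes_to_stop(events))  # [202, 303]
```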

Some forecasts already project that AI will overtake human intelligence. At some foreseeable point in the future, therefore, all cybersecurity roles could move from humans to AI systems. This is both advantageous and disadvantageous. Today, when an AI system fails, the results are normally tolerable, because the scope of operations handled by AI systems is still limited. However, once AI overtakes human intelligence, the results of a failure might be intolerable. Since these security systems will be better than humans, they might be in a position to refuse input from humans. A malfunctioning system might therefore continue operating without any intervention. The perfectionist nature of AI will be both good and bad. Current security systems work toward reducing the number of attacks that can succeed against a system, whereas AI systems work toward eliminating all threats. As a result, false positives might not be recognized as such. They might be treated as genuine detections, disrupting the harmless systems that are stopped from executing.
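The trade-off behind this concern can be made concrete. Suppose a detector assigns each program a maliciousness score and blocks everything at or above a threshold. Lowering the threshold until every threat is caught also blocks more harmless programs. The scores below are made-up numbers, and the `detection_rates` helper is a hypothetical illustration, not part of any product.

```python
def detection_rates(scores, labels, threshold):
    """Block every item whose score is >= threshold and return
    (share of malicious items blocked, share of benign items blocked).
    `labels` holds True for malicious items and False for benign ones."""
    malicious = [s for s, bad in zip(scores, labels) if bad]
    benign = [s for s, bad in zip(scores, labels) if not bad]
    detected = sum(s >= threshold for s in malicious)
    false_positives = sum(s >= threshold for s in benign)
    return detected / len(malicious), false_positives / len(benign)


# Made-up scores: four malicious programs followed by five harmless ones.
scores = [0.95, 0.90, 0.80, 0.40, 0.10, 0.20, 0.30, 0.50, 0.60]
labels = [True, True, True, True, False, False, False, False, False]

print(detection_rates(scores, labels, 0.7))  # (0.75, 0.0)
print(detection_rates(scores, labels, 0.4))  # (1.0, 0.4)
```

In this toy data, catching the last threat means blocking two of the five harmless programs. That is the kind of disruption the paragraph warns about when a system aims to eliminate every threat.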

There are also fears that integrating ML and AI into cybersecurity might do more harm than good. As has been observed over the years, attackers are resilient and will always try to find ways to beat a cybersecurity system. Conventional security tools are usually beaten with methods more sophisticated than those the tools know about. With AI, however, the only way to win may be to confuse it. Threat actors might therefore infiltrate AI training systems and supply bad datasets, corrupting the knowledge that AI-backed security systems acquire. They might also build their own adversarial AI systems to level the playing field, resulting in an AI-versus-AI battle.
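A toy example shows how supplying bad data to a training pipeline can shift what a model learns. The "model" here is deliberately trivial and is an assumption for illustration: it learns a single threshold halfway between the average score of benign samples and the average score of malicious samples. The attacker injects samples that resemble their malware (score 85) but are labeled benign. This raises the threshold enough for a real attack scoring 65 to slip through.

```python
def train_threshold(samples):
    """Learn a detection threshold from (score, is_malicious) pairs as the
    midpoint between the mean benign score and the mean malicious score."""
    benign = [score for score, bad in samples if not bad]
    malicious = [score for score, bad in samples if bad]
    return (sum(benign) / len(benign) + sum(malicious) / len(malicious)) / 2


clean_data = [(10, False), (20, False), (30, False),
              (70, True), (80, True), (90, True)]
# Poisoned copy: the attacker slips in malware-like samples labeled benign.
poisoned_data = clean_data + [(85, False)] * 3

attack_score = 65
for name, data in [("clean", clean_data), ("poisoned", poisoned_data)]:
    threshold = train_threshold(data)
    print(name, threshold, "blocked" if attack_score >= threshold else "missed")
# clean 50.0 blocked
# poisoned 66.25 missed
```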

Lastly, hackers might still use methods that circumvent AI security systems. Social engineering can still be carried out physically. In such cases, AI systems will not be able to help the target. Shoulder surfing—the simple act of looking over someone’s shoulder as they enter crucial details—is also conducted without the use of hacking tools. This also circumvents the security system. Therefore, AI and ML might not be the ultimate answer to cybercrime.

This article has looked at the evolution of cybersecurity from legacy to advanced technologies and then on to futuristic ones such as AI and ML. As explained, the first cybersecurity system was an antivirus program created to stop the first worm. The industry then followed this pattern, building security tools as responses to threats. Legacy security systems introduced signature-based detection. Security tools were loaded with the signatures of common malware and used this knowledge base to detect and stop any program that matched a signature. However, because these systems focused on malware, hackers turned to breaching organizations through the network. In 1970, an OS company was breached via its network and a copy of its OS was stolen. In 1990, the US military suffered a similar attack in which a hacker broke into 97 computers and corrupted them. The cybersecurity industry therefore came up with stronger network security tools. However, these tools still used the signature-based approach and thus could not be trusted to keep all attacks at bay.
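Signature-based detection in its simplest form can be sketched with a whole-file hash. The tool keeps a database of digests of known malware and flags any file whose digest matches. This Python sketch uses the standard `hashlib` module. The signature database and sample bytes are placeholders, and real antivirus engines also match byte patterns and use heuristics rather than relying on file hashes alone. The last line shows the weakness described above: changing a single byte produces a new digest, so a slightly modified sample is no longer recognized.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Placeholder signature database: digests of samples already known to be malicious.
KNOWN_BAD_DIGESTS = {sha256_hex(b"placeholder bytes of a known worm")}


def matches_signature(file_bytes: bytes) -> bool:
    """Flag a file only if its digest exactly matches a known signature."""
    return sha256_hex(file_bytes) in KNOWN_BAD_DIGESTS


print(matches_signature(b"placeholder bytes of a known worm"))   # True
print(matches_signature(b"placeholder bytes of a known worm!"))  # False: one extra byte evades the signature
```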

In the 2000s, the cybersecurity industry introduced a new security concept, layered security, and advised organizations to adopt it. Organizations therefore needed separate security systems for securing networks, computers, and data. However, layered security was expensive, and some threat vectors still infiltrated computers and networks. By 2010, cybercriminals had started using advanced persistent threats (APTs). Attackers were no longer carrying out hit-and-run attacks. Instead, they infiltrated networks and stayed hidden in them while carrying out malicious activities. In addition, phishing was revolutionized and made more effective. Attackers also used denial-of-service (DoS) attacks to overwhelm the capacity of servers and firewalls. Since many companies were being forced out of business by these attacks, the cybersecurity industry developed a new approach to security known as cyber resilience. Rather than focusing on securing the organization during attacks, this approach ensured that organizations could survive them. Users also became more involved in cybersecurity as organizations started training them to avoid common threats. This marked the end of security 1.0.

The cybersecurity industry then moved to the current “security 2.0”, in which it finally created an alternative to signature-based security systems. Anomaly-based security systems were introduced, and they brought greater efficiency and capability than signature-based systems. Anomaly-based systems detect attacks by comparing observed activity against a baseline of normal patterns or behaviors. Apps and traffic that conform to the normal patterns and behaviors are allowed to execute or pass, while those that do not are stopped. While anomaly-based tools are effective, they rely on decisions from humans, so much of the work still falls on IT security admins. The answer to this has been to leverage AI in the hope that such security systems will become self-sufficient.
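The comparison against normal behavior can be sketched as a statistical baseline. Here the baseline is the mean and standard deviation of a metric observed during normal operation, hypothetically outbound megabytes per hour for one server. Any new value more than three standard deviations from the mean is flagged. The metric, the history values, and the three-sigma cut-off are assumptions. As the paragraph notes, a flag like this still needs a human to decide what to do about it.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarize normal behavior as (mean, sample standard deviation)."""
    return mean(history), stdev(history)


def is_anomalous(value, baseline, max_z=3.0):
    """Flag values more than `max_z` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu  # any deviation from a perfectly flat baseline is unusual
    return abs(value - mu) / sigma > max_z


# Hypothetical outbound traffic (MB per hour) observed during normal operation.
baseline = build_baseline([100, 110, 90, 105, 95])

print(is_anomalous(120, baseline))  # False: within normal variation
print(is_anomalous(500, baseline))  # True: unusual volume for an admin to review
```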

AI sounds promising, though many doubts have been cast on it. AI and ML security tools will operate by detecting threats based on anomalies and making informed decisions on how to handle them. These tools will have a learning module that ensures they only get better with time. Before deployment, they will be extensively trained on datasets and in real environments that contain real threats. Once the learning module can provide sufficient information to protect an organization from common threats, the tool will be deployed. One of the main advantages of AI security systems is that they will evolve along with the threats, so any new threat will be studied and thwarted. Despite these advantages, there are worries that AI-powered security systems may ultimately become harmful. As AI overtakes human intelligence, there might come a point where such tools will not accept any human input. There are also worries that attackers might poison the algorithms to make them harmful. The future of AI in cybersecurity is therefore hard to foretell, but there are two main possible outcomes. Either AI-backed security systems will finally contain cybercrime, or AI systems will go rogue, or be made to go rogue, and become cyber threats themselves.

Artificial Intelligence and Cybersecurity

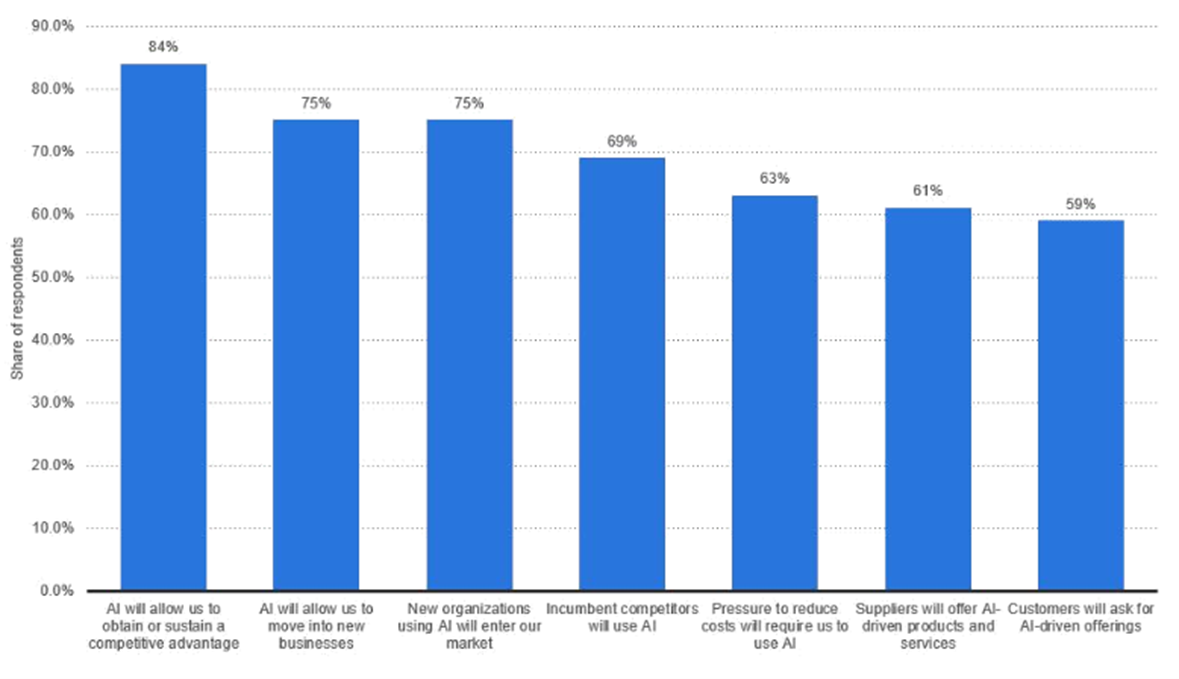

Enterprise customers around the world are investing in Artificial Intelligence and automation to improve their business processes, reinvent productivity, and achieve operational excellence. Banks are looking into new ways to implement fraud detection in their ATM networks. Insurance companies are exploring how to use Artificial Intelligence to predict the profitability of their services to end customers, and brokers have started to apply Artificial Intelligence to predict stock market movements. The following diagram illustrates the reasons why business organizations worldwide were adopting Artificial Intelligence as of 2019:

Artificial Intelligence-powered cybersecurity

Almost all security vendors currently advertise that their technology has some sort of Artificial Intelligence. However, Artificial Intelligence comes in many variations and rests on many underlying technologies. You will want to watch out for buzzwords coined by marketing departments, because it is not always clear what these security vendors are specifically doing with Artificial Intelligence, Machine Learning, and so on.

Building a security solution that is powered by Artificial Intelligence is challenging and requires investment. The costs include building the fundamental systems required to operate the technology and scaling the system in a hyperscale environment. On top of that, there is a very limited pool of talent in the market with sufficient experience in writing Artificial Intelligence code and the ability to handle the complex mathematical principles needed to create an efficient and scalable solution. Even if a company can invest in the infrastructure and hire this talent, Artificial Intelligence requires data, and a lot of it, for training. Only a few companies in the world actually have that amount of data. These companies need in-depth knowledge of, and data on, the threat landscape, digital identities, email accounts, web presence, and telemetry coming from endpoints and mobile devices. This gives companies like Apple, Google, Microsoft, Amazon, and Facebook a clear advantage.

It is clear that Artificial Intelligence-powered security solutions will assist cybersecurity teams in many stages of defense. At one end, Narrow AI could perform simple tasks such as searching for a specific Indicator of Compromise (IOC) in a threat intelligence database. At the other end, a self-aware super AI would not only alert the Security Operations Center (SOC) when it detects a cybercriminal trying to breach the environment but also automatically adjust preventive security controls to stop the breach from happening in the first place. Without any doubt, Artificial Intelligence-based security solutions will offer intelligent recommendations to cybersecurity teams. The following screenshot illustrates the Artificial Intelligence-based security automation in the Microsoft Defender ATP solution:
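At the narrow end of that range, searching for a specific Indicator of Compromise comes down to matching observed artifacts against a threat intelligence feed. The Python sketch below shows only that core lookup. The feed entries use reserved documentation addresses and domains, and the log format and `find_iocs` function are hypothetical. An AI-based product might add prioritization or correlation on top of this matching step.

```python
# Hypothetical threat intelligence feed: indicator -> description.
# Keys are stored in lowercase so lookups are case-insensitive.
THREAT_INTEL = {
    "198.51.100.23": "command-and-control server (example entry)",
    "malicious.example": "phishing domain (example entry)",
}


def find_iocs(log_lines, intel):
    """Return (line_number, indicator, description) for every
    whitespace-separated token in the logs that appears in the feed."""
    hits = []
    for line_number, line in enumerate(log_lines, start=1):
        for token in line.lower().split():
            if token in intel:
                hits.append((line_number, token, intel[token]))
    return hits


logs = [
    "10:00:01 ALLOW 10.0.0.5 198.51.100.23 443",
    "10:00:02 DNS 10.0.0.7 query Malicious.Example",
    "10:00:03 ALLOW 10.0.0.8 203.0.113.10 443",
]
for hit in find_iocs(logs, THREAT_INTEL):
    print(hit)
# (1, '198.51.100.23', 'command-and-control server (example entry)')
# (2, 'malicious.example', 'phishing domain (example entry)')
```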

Use Cases

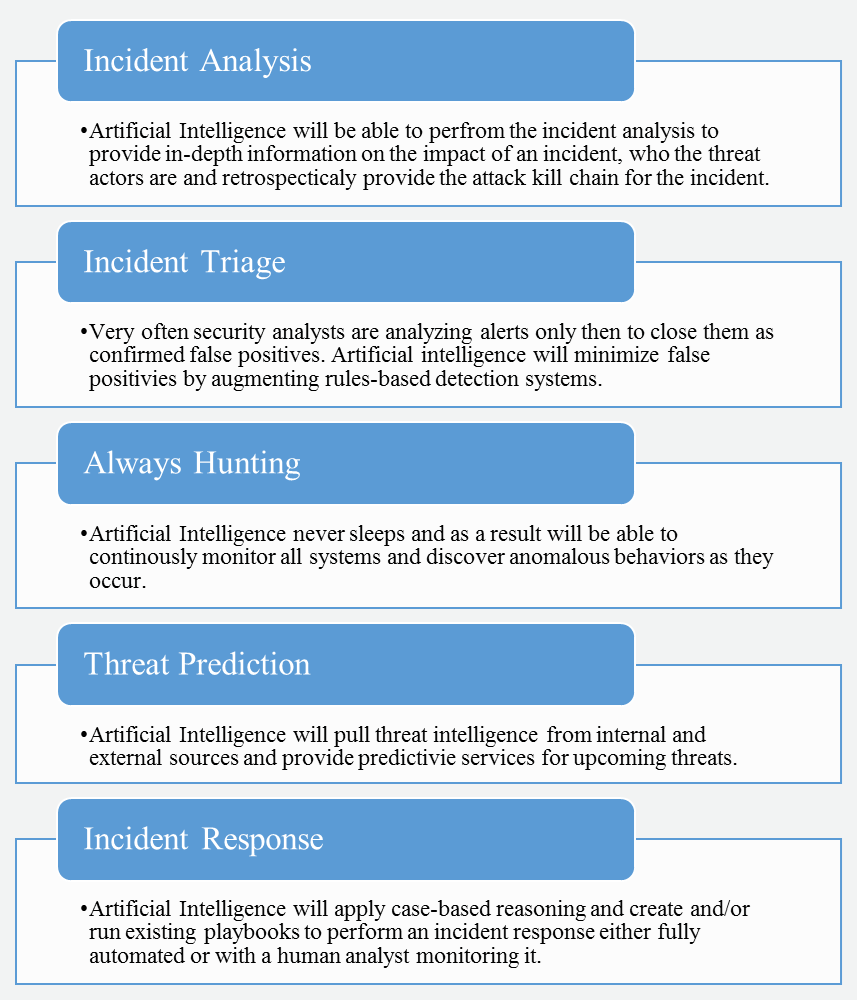

There are five use cases that you will want to enable through Artificial Intelligence to improve your cyber hygiene and operational excellence, all of which are shown in the following diagram:

All of these use cases are fairly new, and no security vendor has yet discovered their full potential. It is clear, however, that the benefits of Artificial Intelligence in fighting cybercrime are critical and that security vendors are investing in it.

In summary, this article has shown that Artificial Intelligence is not one single thing: there are many different technologies, use cases, and scenarios to take into account. It is important to deeply understand what Artificial Intelligence is before jumping on the next call with a security vendor’s sales representative who tries to sell you the world’s first Artificial Intelligence-based security solution. You are now able to ask smart questions such as “Is the Artificial Intelligence a Narrow AI or a True AI capability?” and “When you say Machine Learning, is it supervised, unsupervised, or semi-supervised Machine Learning?” The key is to avoid being fooled and to understand how technology can help you protect against, detect, and respond to the ever-changing threat landscape. You will want to make sure that technology helps you truly discover and remediate cyber-attacks as quickly as possible. The following diagram illustrates a project from MIT: an Artificial Intelligence-based cybersecurity system that can detect 85% of cyber-attacks. However, this is only the beginning:

About the Author

Dr. Erdal Ozkaya is a tenured cybersecurity professional and has juggled the roles of security advisor, speaker, lecturer, and author. Having excelled in business development, management, and academics focused on securing cyberspace, he is passionate about imparting knowledge from his hands-on experience.

As an award-winning technical expert, Dr. Erdal has received many accolades. His recent awards include Cyber Security Professional of the Year MEA, Hall of Fame by CISO Magazine, Cybersecurity Influencer of the Year (2019), Microsoft Circle of Excellence Platinum Club (2017), NATO Center of Excellence (2016), Security Professional of the Year by MEA Channel Magazine (2015), Professional of the Year Sydney (2014), and many speaker-of-the-year awards at conferences. He also holds Global Instructor of the Year awards from EC-Council & Microsoft.

Dr. Erdal has the following qualifications: Doctor of Philosophy in Cybersecurity, Master of Computing Research, Master of Information Systems Security, Bachelor of Information Technology, Microsoft Certified Trainer, Microsoft Certified Learning Consultant, ISO27001 Auditor & Implementer, Certified Ethical Hacker (CEH), Certified Ethical Instructor & Licensed Penetration Tester. He has also been a part-time lecturer at Charles Sturt University in Australia and has co-authored many cybersecurity books, as well as security certification courseware and examinations.

Disclaimer

CISO MAG did not evaluate the advertised/mentioned products, service, or company info, nor does it endorse any of the claims made by the advertisement/writer. The facts, opinions, and language in the articles do not reflect the views of CISO MAG and CISO MAG does not assume any responsibility or liability for the same. Views are personal.